Submitted by Salil Mehta via Statistical Ideas blog,

Here we go again, about a week before the next U.S. presidential election. The President Obama era, whose administration I meaningfully served for more than 3 years as a public change-agent, will soon give way to either Hillary Clinton or Donald Trump. 15% of the country remains undecided, and is looking to polls the same way hundreds of millions of others are. Will it be a certain landslide? We’ll get to that in a moment. This article will explore the biases (i.e., systematic rather than random errors) of the national pollsters. We’ll see where they have repeatedly gone wrong, not just this year, but in prior elections. The argument we’ve made throughout this month, read by more than 2 million and shared by thousands, that Trump indisputably has a greater than 20% probability of winning still stands, even as the other pollsters are only now coming around to our original assessment. But we’ll also see, or you can as well since we freely provide all of the raw data here, that a 5-point lead by Hillary (even if believed) is merely a central point around which the actual results on November 8 can vary wildly on either side and leave most pollsters horrifyingly wrong this year.

To many, a 5% lead in the polls seems like such a sure thing. But of course in other aspects of life (roulette, weather forecasts, stock markets, etc.) we know that’s slim. This year in particular we have twin forces wreaking havoc on normally decent pollsters: two 3rd-party candidates alongside a sizeable number of independents, and highly biased pollsters creating outlier views on Trump. Most of the “probabilities” for a Trump win were settling at ~10% until recently (here), though we have long argued (here, here, here) that such probabilities are too low. We’ve refreshed the chart shown here, which shows that because of the Access Hollywood video leak, Trump’s probability of winning was halved from the 40s (in percent) to ~20%. And that’s it! It’s low, but it is twice what the mainstream media kept insisting, and in reality we are looking at a 1:4 chance. So buckle up. 1:4 chances don’t never happen!

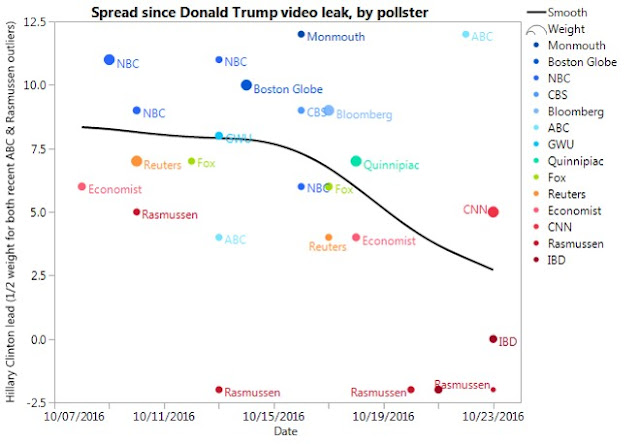

Also see below how the pollsters viewed the “spread”, here defined as a percentage-point Hillary lead.

Let’s take an up-close look at how the developing trend in pollster data impacts low-frequency pollster data. One can’t simply take high-quality averages each day, particularly since the exogenous impact of the video leak earlier this month. What we do see, though, is what we’ve long argued would be a re-tightening of spreads, and in fact this is corroborated across a number of other statistics. This is no time for anyone to be unworried since there is still an election to be had! For this particular chart it is worth noting that the weight of each data point is based on the frequency and recency of the poll, with a reduced weight given to the notable outliers, ABC and Rasmussen. And to be fair, there are others. We should note that such weighting adjustments didn’t change our overall conclusion that the spreads have considerably narrowed, though they still remain above the levels from before the video leak. But momentum here is on Trump’s side.
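To make the weighting idea above concrete, here is a minimal sketch of a recency-weighted average with reduced weight for flagged outliers. Everything in it is an assumption for illustration: the poll values, the 5-day half-life, the 0.5 outlier factor, and the names `poll_weight` and `weighted_spread` are hypothetical, and it ignores polling frequency, which our chart also accounts for.

```python
# Hypothetical polls: (pollster, Clinton lead in points, days since the poll).
# These numbers are illustrative only and are not the dataset behind the chart.
polls = [
    ("A", 5.0, 1),
    ("B", 12.0, 10),
    ("C", 1.0, 2),
    ("D", 6.0, 3),
]

HALF_LIFE_DAYS = 5.0      # assumed: a poll loses half its weight every 5 days
OUTLIER_WEIGHT = 0.5      # assumed: flagged outliers count half as much
outliers = {"B", "C"}     # hypothetical outlier pollsters

def poll_weight(name, age_days):
    """Exponential recency decay, with a reduced weight for flagged outliers."""
    weight = 0.5 ** (age_days / HALF_LIFE_DAYS)
    if name in outliers:
        weight *= OUTLIER_WEIGHT
    return weight

def weighted_spread(polls):
    """Weighted average of the Clinton lead across all polls."""
    weights = [poll_weight(name, age) for name, _, age in polls]
    total = sum(weights)
    return sum(w * lead for w, (_, lead, _) in zip(weights, polls)) / total

average = weighted_spread(polls)
```

Changing the half-life or the outlier factor shifts the average, which is one way to check how sensitive a conclusion is to the weighting choices.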

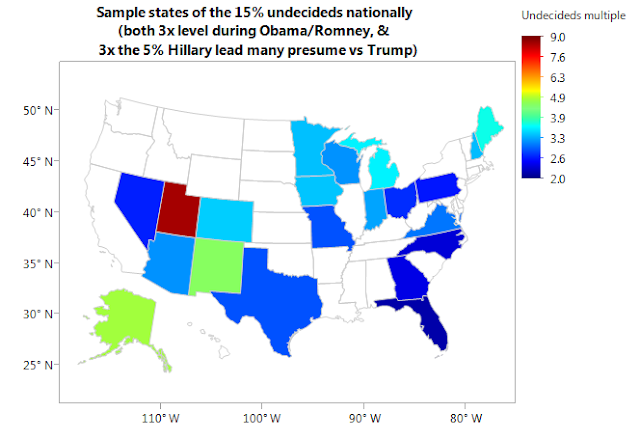

Before slicing and dicing the historical polling data, it is worth looking at the number of undecideds at this point in the campaign cycle. They add considerable risk to pollsters’ views, whether they acknowledge that or not. We have nearly 20 million undecided voters right now, or 3 times the level at this time last election (Obama versus Romney). These undecided voters are also not homogeneously spread across the U.S.! See the map below and you can appreciate some real wild-card locations. Now the portion of undecideds collectively is still about 15% for the U.S., and as a reminder that implies 3 times the noise relative to the presumed signal of a 5% Hillary lead over Trump. And these undecideds are present in large numbers in must-win states such as Texas, Florida, and Ohio!

Now let’s dive into the data from national pollsters and scrutinize patterns in the errors. Of course, current and historic polling research is difficult to come by, so we developed a large dataset so that this information is now available to you, including metrics such as reported margins of error and demographic samples! We look at 13 chief pollsters who also have a decent amount of polling history to create accuracy measures from. Now see below a simple chart for each of the pollsters, with their current “spread” sorted on the left, versus their historic bias (percentage points by which they have historically overestimated liberal/Democratic candidates).

The evidence is fairly straightforward. IBD and Rasmussen are clearly showing the most favorable results for Trump, and both generally show polls that are GOP-biased by about 2 percentage points. On the other extreme we see some erratic noise. A traditionally conservative-biased Monmouth last conducted a national poll a couple of weeks ago, and that showed Hillary up by 12. Are you factoring that in? Because that won’t last! Yet ABC and USAToday fit a pattern of being more liberally biased, both with a ~9-point Hillary lead; and of course both are biased toward Democrats by about 1 percentage point. The difference between the ABC and USAToday spreads, and the IBD and Rasmussen spreads, is gigantic and we’ll show the implications of that further below.

Now as a theoretical probability website, we’ll have to take this much deeper. We start with the popular variance decomposition of the predictive errors using the following formula:

$$\text{prediction error} = \text{bias}^2 + \text{variance of errors}$$

We highlight, though, that this assumes all pollsters share the same parameters, above all the same bias. But they don’t, per the above, and hence we will need to make modifications in understanding the true distribution of pollsters’ spreads as we go further along. Just the basic formula above shows the need to look at two lower-order components: the bias, but also the variance in both the model and the errors as well! So in the chart below we take this same list of 13 pollsters and also plot their typical distribution of absolute errors. For example, Nate Silver’s FiveThirtyEight (538) may appear to be in the middle of the pack on the previous chart, in both their current spread (just over 5%) and historic bias (generally just over 1% leaning conservative). But in the chart below (in yellow) we see that 538’s advertised margin of error is 4 percentage points (in either direction), though historically (in red) they have been a lot closer to 2 percentage points. In other words, we can expect that instead of their 5-point spread for Hillary, using just recent history for 538’s biases alone, the true spread is generally between 4 and 8 points (2/3 of the time), and if using their current margin of error, we would have a wide range from 2 to 10 points (again 2/3 of the time). However, for our work we’ll need to consider all pollsters collectively, including their correlations with one another. And hence at the end of this article we’ll look at a proper bootstrap distribution that looks at the real errors made by pollsters historically, as well as the wide dispersion in biases that these different pollsters continuously bring to the table in recent elections.
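The decomposition and the 538 ranges above can be checked with a few lines of arithmetic. This is only a sketch under stated assumptions: the `errors` list is hypothetical, and `likely_range` is a hypothetical helper that reflects our reading of the figures, namely that the ~1-point conservative lean is added back to the ~5-point spread before applying the error band.

```python
from statistics import mean, pvariance

# Hypothetical signed errors for one pollster, in points
# (predicted Clinton lead minus actual Clinton lead). Illustrative only.
errors = [2.0, -1.0, 3.0, 0.0, 1.0]

bias = mean(errors)                  # average signed error
variance = pvariance(errors)         # population variance of errors around the bias
mse = mean(e * e for e in errors)    # mean squared prediction error

# The identity from the text: squared prediction error = bias^2 + variance of errors.
decomposition_holds = abs(mse - (bias ** 2 + variance)) < 1e-9

def likely_range(spread, gop_lean, half_width):
    """Shift the spread by the historical lean, then apply +/- half_width.

    Assumption: a pollster that leans conservative understates the Clinton
    lead, so the lean is added back to the reported spread.
    """
    center = spread + gop_lean
    return center - half_width, center + half_width

historic_range = likely_range(5.0, 1.0, 2.0)     # historic ~2-point error
advertised_range = likely_range(5.0, 1.0, 4.0)   # advertised 4-point margin
```

With these rounded inputs the two ranges come out to 4 to 8 points and 2 to 10 points, matching the figures quoted for 538.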

Now putting this together with the formula above, we can use machine learning to recognize the factors that help differentiate the high-error pollsters from the low-error pollsters. We see that the amount of absolute error (which we show above), and even the volatility in the same, is less informative than simply looking at the bias of the pollster to begin with.

What the illustrated output above shows is that the basic clustering of the 13 pollsters (into high-error and low-error) is best done by segregating on the polling bias alone! The pollsters within the columns below are not sorted in any meaningful way. The point is to expose the insight that, generally, the more liberal the pollster is, the higher its errors tend to be. This is something to be mindful of when aggregating polls of polls, over any length of history.
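For readers who want to see the mechanics of asking which single factor best separates two groups, here is a minimal sketch using a one-split decision stump. The stump is a stand-in chosen for illustration, not necessarily the technique behind the output above. The six data rows are hypothetical and deliberately constructed so that bias separates the groups cleanly, so the result demonstrates the procedure and is not evidence in itself.

```python
# Hypothetical pollsters: (pro-Democratic bias in points,
#                          volatility of errors in points,
#                          True if labeled high-error).
# Constructed for illustration; not the article's dataset.
data = [
    (-2.0, 1.5, False),
    (-1.5, 3.0, False),
    (-1.0, 2.5, False),
    (0.5, 1.0, True),
    (1.0, 3.5, True),
    (1.5, 2.0, True),
]

def best_stump(rows, feature_index):
    """Find the threshold where 'feature > threshold' best predicts high error.

    Returns (threshold, accuracy). Only the 'greater than' direction is tried,
    which is a simplification.
    """
    values = sorted({row[feature_index] for row in rows})
    best = (None, 0.0)
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        correct = sum((row[feature_index] > threshold) == row[2] for row in rows)
        accuracy = correct / len(rows)
        if accuracy > best[1]:
            best = (threshold, accuracy)
    return best

bias_split = best_stump(data, 0)         # split on bias
volatility_split = best_stump(data, 1)   # split on volatility of errors
```

Comparing the accuracy in `bias_split` against `volatility_split` shows which single feature separates the two groups better on this toy data.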

So now let’s look at trying to normalize the pollster data using probability formulas. First we show the pollsters’ historical bias relative to their current spread (in green). Next we show how an adjustment for that bias would make the current spread appear (in red). And therein lies one of the issues with this year’s polls. The polls this year are so damaged that even correcting for historic bias leads to exaggerated polls with very high variation among them! Also note that the chart has been enhanced so that the weight/size of each pollster’s marker reflects how historically accurate that pollster is. One will notice NBC and HuffingtonPost, for example, despite other flaws with their polling, with among the largest data markers and hence accuracy weights, having been balanced over time in their predicted spread. The other pollsters certainly have work to do to be more correct, particularly Monmouth, as they are single-handedly creating distortions in what would have been a fresh upward trend in the top chart below!
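The bias adjustment described above amounts to subtracting each pollster’s historical lean from its current spread. A minimal sketch, with hypothetical pollster names and values and a hypothetical helper `bias_adjusted`:

```python
# Hypothetical pollsters: name -> (current Clinton lead, historical pro-Democratic bias),
# both in percentage points. Illustrative values only, not the article's dataset.
pollsters = {
    "Pollster A": (9.0, 1.0),
    "Pollster B": (1.0, -2.0),   # negative bias means a historical GOP lean
    "Pollster C": (5.0, 0.0),
}

def bias_adjusted(spread, dem_bias):
    """Remove the historical lean: a pro-Democratic bias lowers the Clinton lead."""
    return spread - dem_bias

adjusted = {name: bias_adjusted(s, b) for name, (s, b) in pollsters.items()}

raw_spreads = [s for s, _ in pollsters.values()]
raw_range = max(raw_spreads) - min(raw_spreads)
adjusted_range = max(adjusted.values()) - min(adjusted.values())
```

In this toy example the adjustment narrows the gap between pollsters but does not close it, which is the kind of leftover variation the paragraph above is pointing at.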

We do the same thing now for the pollsters’ variance in bias error, again assuming no expected difference in bias. We see that generally the variation in errors has been high, even for pollsters such as NBC or Fox, who advertise their polls as having a far smaller margin of error than they actually do. Note that NBC is less biased in their spread, as shown earlier, but the precision of their process in any given year is quite rough, as we will see below. So this pollster’s balanced record is the result of being lucky. We see here as well, in conjunction with the chart above, that Rasmussen appears to be as badly behaved as ABC, though the two are conspicuous outliers in different directions. And organizations in the chart below that are showing high errors, such as Princeton and CNN, have still undoubtedly underestimated their actual error given how far off from the typical spread they are this year.

In the ultimate analysis of these pollsters, we want to do more than develop theoretical parameters to balance the spreads among pollsters. So we developed a fresh, non-parametric bootstrap approach (a technique named after the idiom of pulling oneself up by one’s own bootstraps) in order to assess the true distribution of the spread (of the average of the polls). Recall that simply thinking the polling average is 5 points for Hillary is erroneous. This is because the pollsters themselves are merely offering samples of their distorted reality. A better picture could be developed by pooling insights from all of the pollsters, which would be closer to the truth and not limited to any one pollster’s range (though that range is so large for 2016 that the lower of the two distributions below reflects the probability of the November 8 outcome itself!). We first run over a thousand simulated spreads below, noting that any of these spreads could be the correct expected value and the actual election can still fall within a certain margin of error around it. Even prior to that, though, notice the high kurtosis (the risk in fatter tails per here, here, here) versus the normal distribution below (in red).

Second, each spread value, including the smallest one simulated at a +2.6% lead for Hillary, still has an expected 2-3% margin of error. But per the formula we showed above, the actual bias will have larger variation still, and that’s taken into account only in the latter of the two charts below. In other words, use this distribution as a guide for what’s going to happen this year. The probability of a Trump victory is still above 20% (and unquestionably not zero). Pollsters such as Monmouth and others are now going to aggressively play catch-up, in Trump’s favor! And in any event, this year is proving itself to be unusual simply by observing how wild these pollsters have gone. Recall that the blue line in the pollsters’ bias chart above is not flat but still at a steep angle, showing the difficulty of even averaging pollsters this year.
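The two-step idea above (resample the pollsters’ spreads, then allow for a further historical miss) can be sketched as a simple non-parametric bootstrap. This is a simplified illustration under stated assumptions, not our full procedure: the spreads and historical errors are hypothetical, pollsters are treated as independent (no correlations), and `bootstrap_outcomes` is a hypothetical helper.

```python
import random
from statistics import mean

# Hypothetical current spreads (Clinton lead, points), one per pollster.
spreads = [1.0, 2.0, 4.0, 5.0, 5.0, 6.0, 7.0, 9.0, 12.0]
# Hypothetical historical misses of the polling average versus the outcome (points).
historical_errors = [-4.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]

def bootstrap_outcomes(spreads, errors, n_sims=2000, seed=0):
    """Simulate election-day spreads by resampling with replacement."""
    rng = random.Random(seed)   # fixed seed so the sketch is reproducible
    outcomes = []
    for _ in range(n_sims):
        # Step 1: resample pollsters with replacement and average their spreads.
        resample = rng.choices(spreads, k=len(spreads))
        # Step 2: add one resampled historical miss on top of that average.
        outcomes.append(mean(resample) + rng.choice(errors))
    return outcomes

outcomes = bootstrap_outcomes(spreads, historical_errors)
# Share of simulated national spreads where Trump is ahead (spread below zero).
share_trump_ahead = sum(o < 0 for o in outcomes) / len(outcomes)
```

The share computed here depends entirely on the made-up inputs and refers to the national spread only, so it should not be read as an estimate of the election probability discussed above.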

Lastly, this aligns with our ongoing concern that polls this year have been less accurate than normal and hence it is not as wise to depend on them. The accuracy can fluctuate (and it will, given the randomness these statistics intrinsically provide). We’ve had a string of better than average polling results before, so kudos to those professionals. That performance will come under pressure this year, though. Like in any analysis, don’t be overconfident that these polls are benign, which would also be perilous for voter turnout. Academics whose work we link above (such as our friend Professor Gelman) already state that the margins of error should be nearly twice as large, and the Betfair trade we noted has already doubled in value in just a couple of weeks! The bottom line is that we'll see many pollsters wrong this year for sure, and while there is a 20% or so probability of a Trump victory, there is also an equally fat-tailed 20% or so chance of a landslide blow-out from Hillary (something beyond what we saw for Obama versus McCain in 2008!). Not a sure thing there, either.

The post Pollsters Gone Wild: “Buckle Up, 1-In-4 Chances Don’t Never Happen” appeared first on crude-oil.top.